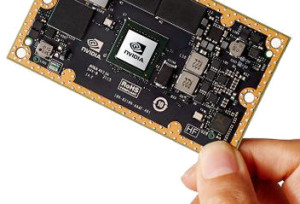

An affordable, credit-card-sized supercomputer by NVIDIA

Yesterday NVIDIA announced the Jetson TX1, a credit-card-sized Linux system-on-module designed for applications ranging from autonomous navigation to deep-learning-driven inference and analytics.

It will soon be available as a developer kit, i.e. a mini-ITX carrier board with the module pre-mounted. The kit has low power consumption and provides an out-of-the-box desktop experience (it ships with a customized Ubuntu Linux distribution). Unfortunately, it needs a USB hub to connect both a keyboard and a mouse, and its 16GB of eMMC storage is probably too little.

Since I really enjoyed working on artificial intelligence at university and during a stint as a contractor at a public research center, I think I will ask for the developer kit for Christmas. I plan to use it as a media center, for smart home automation, and for personal deep learning projects.

You may wonder why I chose this board. Simply because it packs several interesting features:

- a Tegra X1 SoC: an ARM Cortex-A57 CPU and a Maxwell-based GPU with 256 CUDA cores (delivering about 1 teraflop of half-precision (FP16) peak performance at 5.7W, roughly the peak speed of a small supercomputer from 15 years ago!)

- 4GB of RAM shared between the CPU and GPU
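As a rough sanity check on the 1 teraflop figure, here is a back-of-the-envelope estimate. It assumes a GPU clock of about 1 GHz, one fused multiply-add (2 floating-point operations) per CUDA core per cycle, and two FP16 values packed per operation in half precision:

$$
256\ \text{cores} \times 2\ \frac{\text{FLOP}}{\text{FMA}} \times 2\ (\text{FP16 packing}) \times 1\ \text{GHz} \approx 1024\ \text{GFLOPS} \approx 1\ \text{TFLOPS}
$$

In single precision (FP32) the packing factor disappears, so the same estimate gives roughly half that, about 0.5 teraflop.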

That sounds promising to me.